Intonation Incantation

CPSC 581 - Assignment 1

I love conversations. Talking with people is one of my favorite ways to pass the time, but messaging apps just don't cut it when it comes to having a real conversation. Text only captures the words of a conversation, but how we say things matters! A simple "right" could be excited, questioning, or even mad, but without the context of someone's tone it's impossible to tell the difference.

That's where Intonation Incantation comes in!

Intonation Incantation seeks to add tonality back to messages. Using a D3.js front-end and a Node.js back-end with PostgreSQL, Intonation Incantation takes disembodied intonations and maps them to visual variables to give a sense of the sender's tone.

Video Demo

How Does It Work

Starting the App

When you start Intonation Incantation, you'll need to enter a host code. The host code allows you to connect to all other users using the same host code. You'll be able to see their messages and send messages back!
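As a rough illustration of the host code idea, the sketch below groups users by host code in memory and works out who should receive a message. The `HostRooms` class and its `join` and `recipients` methods are hypothetical names made up for this example. The real back-end uses Node.js with PostgreSQL and may organize this quite differently.

```javascript
// Hypothetical in-memory sketch: users who share a host code share a room.
// This is an assumption for illustration, not the app's actual back-end code.
class HostRooms {
  constructor() {
    // Maps a host code to the set of user ids connected with that code.
    this.rooms = new Map();
  }

  // Register a user under a host code, creating the room if needed.
  join(hostCode, userId) {
    if (!this.rooms.has(hostCode)) {
      this.rooms.set(hostCode, new Set());
    }
    this.rooms.get(hostCode).add(userId);
  }

  // Everyone with the same host code except the sender.
  recipients(hostCode, senderId) {
    const members = this.rooms.get(hostCode) || new Set();
    return [...members].filter((id) => id !== senderId);
  }
}
```

With this sketch, two users who both join with the same code would see each other's messages, while a user on a different code would not.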

Sending your Message

After you've typed up your message, hold on before sending it! Tap the Tone button once to begin recording the tone you want to add to the message. The system takes in up to two sounds, each rising, falling, or neutral in tone, and maps them to visual variables to convey your tone. Tap the Tone button again to end the recording and you'll be greeted with an emoji that represents the tone the system thinks you're trying to convey. Now send away!
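One way to picture the rising/falling/neutral step described above is the sketch below. It assumes each recorded sound has already been turned into a list of pitch estimates in Hz, and it compares the first and last estimate. The function names `classifyContour` and `toneKey` and the one-semitone threshold are assumptions for illustration, not values taken from the app.

```javascript
// Hypothetical helper: label one sound as "rising", "falling", or "neutral".
// `pitchesHz` is an array of pitch estimates (Hz) sampled across the sound.
// The default 1-semitone threshold is an assumed value.
function classifyContour(pitchesHz, thresholdSemitones = 1) {
  if (pitchesHz.length < 2) return "neutral";
  const first = pitchesHz[0];
  const last = pitchesHz[pitchesHz.length - 1];
  // Signed distance in semitones between the start and end of the sound.
  const semitones = 12 * Math.log2(last / first);
  if (semitones > thresholdSemitones) return "rising";
  if (semitones < -thresholdSemitones) return "falling";
  return "neutral";
}

// Hypothetical helper: build a lookup key from at most two sounds,
// e.g. "rising+falling", which could then index a table of visual variables.
function toneKey(sounds) {
  return sounds.slice(0, 2).map((s) => classifyContour(s)).join("+");
}
```

A key such as `"rising+falling"` could then be looked up in a table of colors, animations, and emojis; that table is left out here because the actual mappings are not listed in this document.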

Need More Control?

You can switch into Pro Mode using the Pro Mode button. In Pro Mode, the system accepts sets of 2 or 3 musical notes separated by silence. It then maps those notes to a tone using both whether the notes are rising, falling, or neutral and whether the interval between notes is major, minor, perfect, or diminished/augmented. This gives you even more room to add nuance to your message! The Tone button also indicates which intervals it hears you play.
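The Pro Mode classification can be sketched as follows, assuming each note has already been detected as a single frequency in Hz. The sketch rounds each note to the nearest equal-tempered pitch, counts semitones between neighboring notes, and reads off a direction and quality. The names `hzToMidi`, `describeInterval`, and `describePhrase` are hypothetical. Because it only counts semitones, it lumps the tritone into "diminished/augmented" and treats intervals larger than an octave like their smaller equivalents.

```javascript
// Interval quality by semitone count within an octave (0 = unison/octave).
const QUALITY_BY_SEMITONES = [
  "perfect", "minor", "major", "minor", "major", "perfect",
  "diminished/augmented", "perfect", "minor", "major", "minor", "major",
];

// Convert a frequency to the nearest MIDI note number (A4 = 440 Hz = 69).
function hzToMidi(hz) {
  return Math.round(69 + 12 * Math.log2(hz / 440));
}

// Hypothetical helper: direction and quality of the interval between two notes.
function describeInterval(fromHz, toHz) {
  const semitones = hzToMidi(toHz) - hzToMidi(fromHz);
  let direction = "neutral";
  if (semitones > 0) direction = "rising";
  if (semitones < 0) direction = "falling";
  const quality = QUALITY_BY_SEMITONES[Math.abs(semitones) % 12];
  return { direction, quality, semitones };
}

// Hypothetical helper: describe each neighboring pair in a 2- or 3-note phrase.
function describePhrase(notesHz) {
  const intervals = [];
  for (let i = 1; i < notesHz.length; i++) {
    intervals.push(describeInterval(notesHz[i - 1], notesHz[i]));
  }
  return intervals;
}
```

For example, playing A4 (440 Hz) followed by E5 (about 659.26 Hz) is seven semitones up, which this sketch labels a rising perfect interval.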

There's lots to Explore!

Pro Mode has over 80 different combinations of animation, color, and other visual variables to map to, so there are lots of sound combinations to try out! If you're ever lost, just tap the info button for more help!

Design Process

Initial Sketches

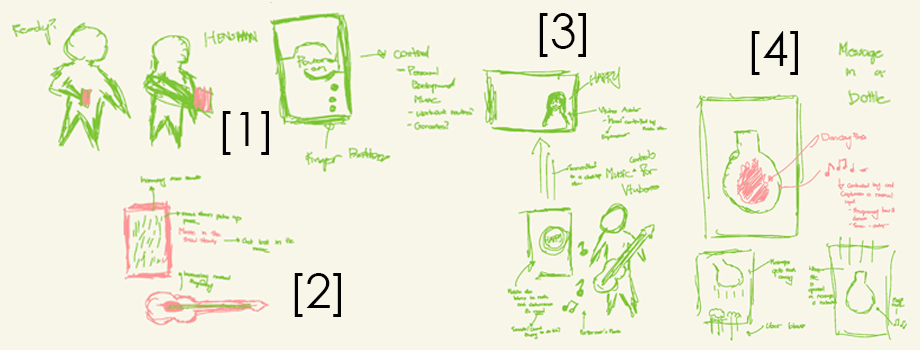

My initial sketches went in a number of different directions. For example, many of my early ideas came from wondering how I could use a guitar (the only instrument I can play) to control different things. We can see this in [2,3,8]. In [2], I wonder how I could emphasize the meditative nature of playing an instrument with an app that plays increasingly loud background noises, like rain or snow, that block off the rest of the world when you're deep in thought. In [3], I explore how an instrument could be used to control a virtual livestreaming avatar while the streamer performs. And in [8], I wonder how a guitar could be used to play a maze-like game.

Another theme in these initial sketches is interactions that assist creativity, like [7], where sound and the mobile device's orientation are used to control a tablet pen, or [9], where ambient sounds are used to collect a color palette. [1,11] explore the idea of using a Tokusatsu-style transformation motion to control your phone. [10] takes a different direction and reports on the status of a 3D printer using sound.

Even in these initial sketches, you can see the theme of communication come up. For example, [4] explores using sounds to leave messages for others, [5] explores how to design interaction around the notion of a wordless conversation, and [6] is an early version of Intonation Incantation.

Refining Intonation Incantation

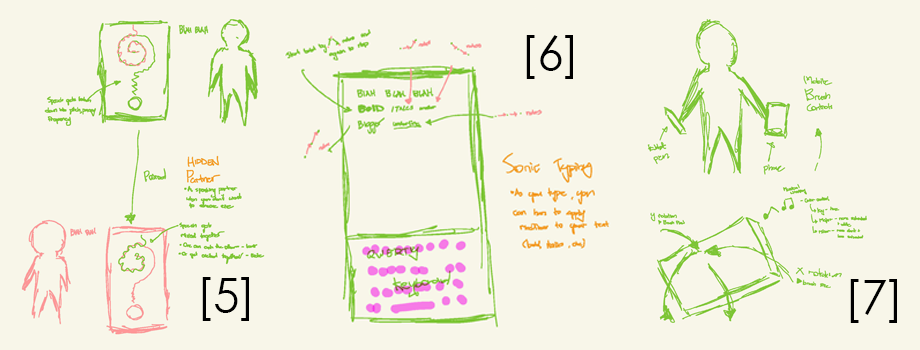

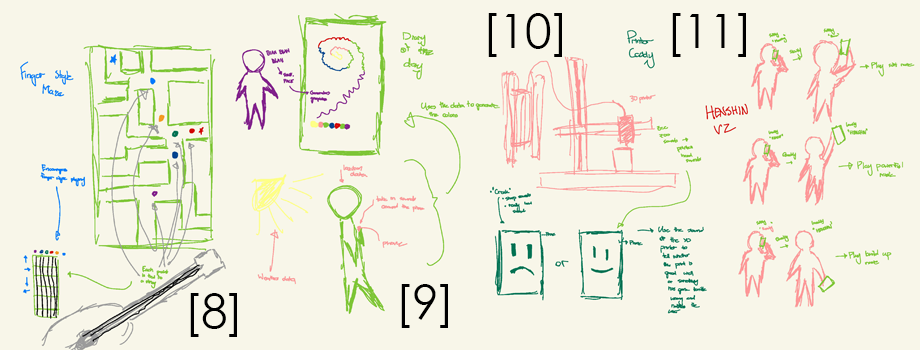

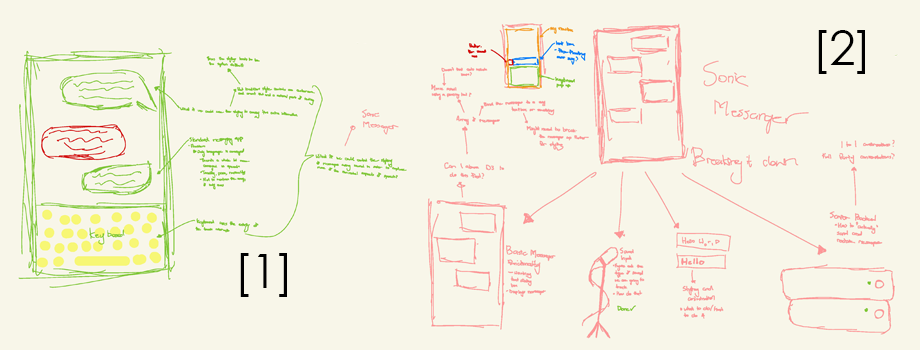

These two sketches show the first incarnation of the system: [1] shows the general idea of a messaging app that is given context using sound, and [2] starts to turn that initial idea into something feasible to implement by breaking down what would need to be built.

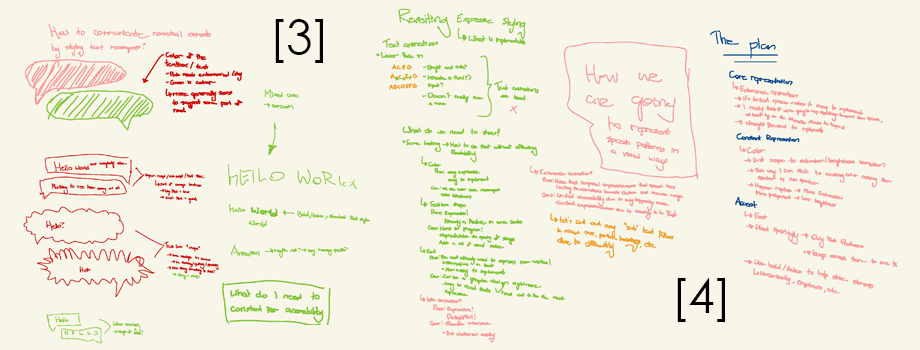

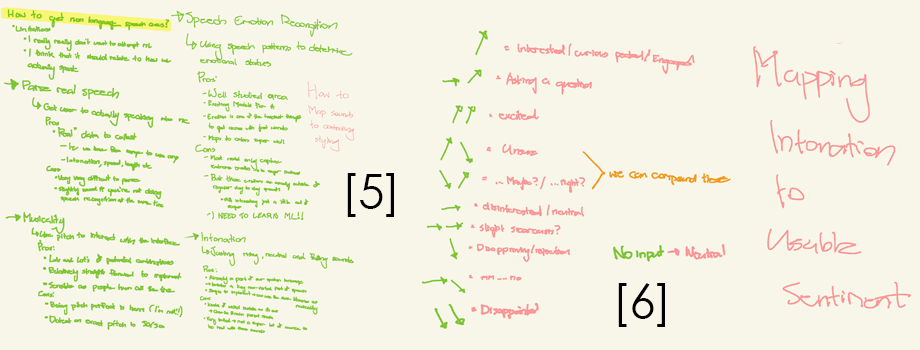

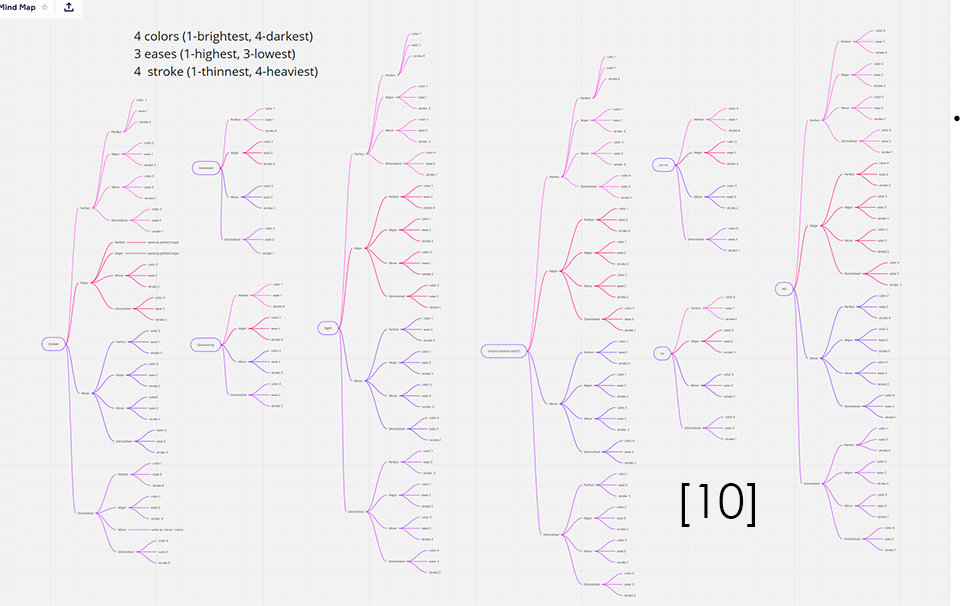

[3,4] are two different brainstorming sessions where I debated which visual variables I could use to encode information in a messaging-style app. While [3] explored visual variables in general, [4] homed in on what was feasible to implement.

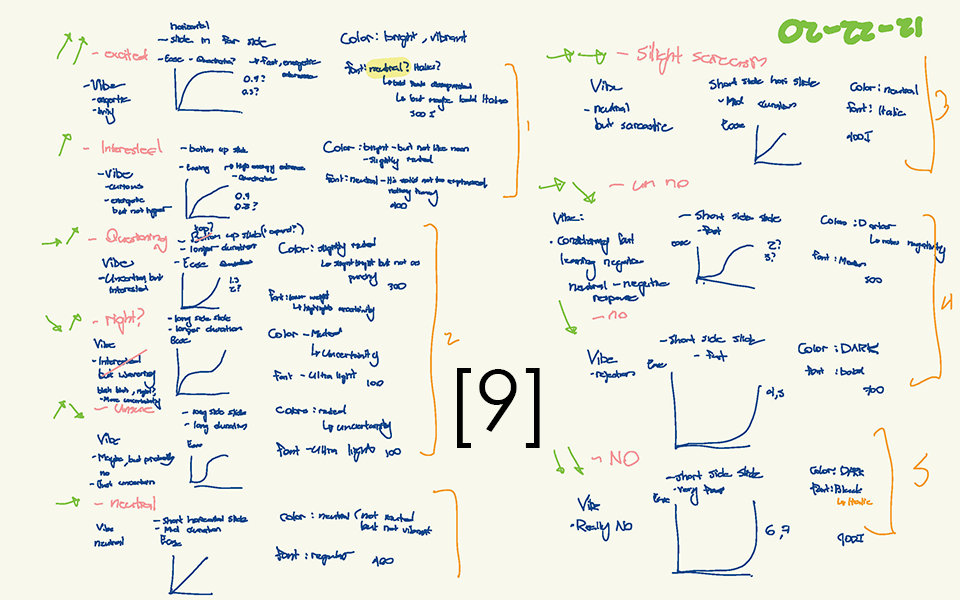

In [5], I brainstormed all the elements of speech and sound I could think of that could be used to encode information about the speech and emotion of the sender. Then in [6], having decided on intonation, I started to map intonation to more general "vibes" of the tone pattern that I could use to encode data.

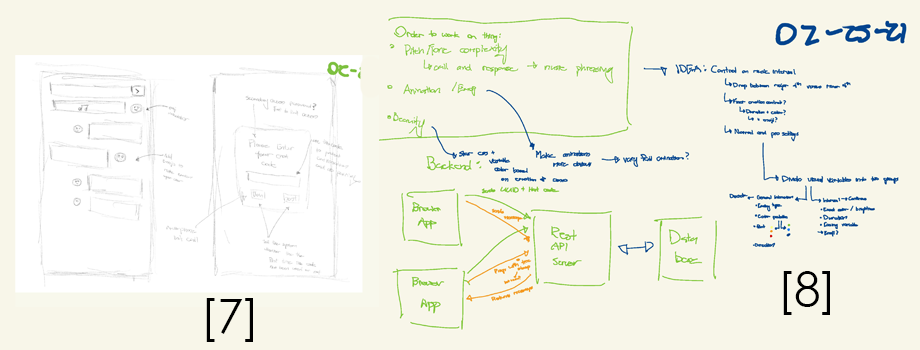

In [7,8], I consider feedback from the assignment check-in and a meeting with Micheal. [7] explores the idea of incorporating emojis into the messages to make the emotion clearer, as well as an interface for connecting to others on the site. [8] starts to think about using musical intervals and includes a quick sketch of how the back-end will work.

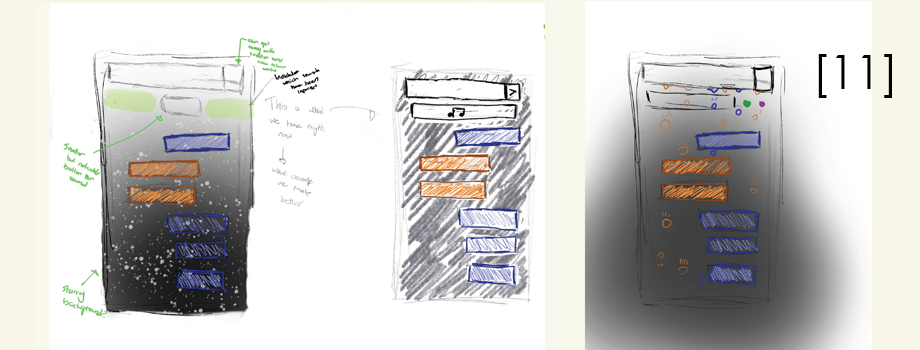

In [9,10], I sketch out the particular mappings from intonation and musical intervals that I intended to implement. Finally, in [11], I sketch out some ideas for refining the aesthetics of the project.